Every day, law enforcement agencies face a monumental task: protecting communities with limited resources. Traditionally, policing has been reactive; officers respond to incidents after they occur. But what if technology could shift the paradigm from reaction to prevention? Imagine if data could indicate where and when crimes are most likely to happen, allowing a more proactive and efficient approach to keeping people safe. This isn’t a scene from a sci-fi movie; it’s the burgeoning reality of AI public safety.

Artificial intelligence is making its way into squad cars and command centers, and it is transforming core functions of law enforcement. By analyzing vast datasets, AI promises to identify crime patterns, predict future hotspots, and help agencies allocate their resources with greater precision. The most discussed, and most debated, aspect of this revolution is predictive policing AI. This article will dive deep into the world of AI public safety. We will explore how these technologies work, their real-world applications, and the critical ethical tightrope we must walk. Ultimately, we’ll see how data analysis is fundamentally changing the face of modern law enforcement.


Beyond the Hype: What is AI Public Safety?

At its core, AI public safety is the application of artificial intelligence technologies to enhance the effectiveness and efficiency of law enforcement and emergency services. It’s not about robot officers or dystopian surveillance states; it’s about using powerful data processing to augment human expertise. This field stands on two main pillars: predicting where crime is likely to occur and optimizing how resources are deployed to prevent it.

The goal is to move from a “pins-on-a-map” model to a dynamic, forward-looking strategy. Instead of just seeing where crimes have happened, agencies can now forecast where they are likely to happen. This predictive capability allows for smarter patrols and community engagement efforts, potentially deterring crime before it even takes place. A key engine driving this transformation is predictive policing AI, a tool that is both powerful and fraught with complexity.

The Engine of AI Public Safety: Predictive Policing AI

Predictive policing AI uses machine learning algorithms to analyze historical crime data. It looks for statistical patterns related to the type of crime, location, and time of day. By identifying these correlations, the system can generate forecasts about which areas have an elevated risk of specific criminal activity during a particular timeframe. For example, an algorithm might identify a neighborhood that consistently experiences a spike in car break-ins on weekend evenings.

This isn’t a crystal ball; it’s statistical probability. The system doesn’t predict that a specific person will commit a crime. Instead, it flags small, specific geographic areas—often as small as a few city blocks—as “hotspots.” The core idea of this AI public safety approach is to give officers a data-backed reason to be present in a certain area, creating a deterrent effect and enabling faster response times if an incident does occur.
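To make the idea concrete, here is a minimal Python sketch of the simplest possible hotspot baseline: snap each past incident to a grid cell, keep only incidents of one crime type in one time band (for example, weekend evenings), and rank cells by count. The grid size and the names `CELL_SIZE`, `cell_of`, `time_band`, and `hotspots` are hypothetical, and commercial predictive policing products use their own, more sophisticated models; this only illustrates the counting logic behind a hotspot map.

```python
import math
from collections import Counter
from datetime import datetime

# Hypothetical grid cell size in degrees (roughly 100 m of latitude).
CELL_SIZE = 0.001

def cell_of(lat, lon, size=CELL_SIZE):
    """Snap a coordinate to an integer grid-cell index."""
    return (math.floor(lat / size), math.floor(lon / size))

def time_band(ts):
    """Bucket a timestamp into (weekday/weekend, evening/other)."""
    day_type = "weekend" if ts.weekday() >= 5 else "weekday"
    part = "evening" if ts.hour >= 18 else "other"
    return (day_type, part)

def hotspots(incidents, crime_type, band, top_k=3):
    """Rank grid cells by past incidents of one crime type in one time band.

    incidents: iterable of (crime_type, lat, lon, datetime) tuples.
    Returns up to top_k (cell, share_of_matching_incidents) pairs.
    """
    counts = Counter(
        cell_of(lat, lon)
        for ctype, lat, lon, ts in incidents
        if ctype == crime_type and time_band(ts) == band
    )
    total = sum(counts.values())
    return [(cell, n / total) for cell, n in counts.most_common(top_k)]

incidents = [
    ("car_breakin", 34.0505, -118.2505, datetime(2024, 6, 1, 21, 0)),  # Saturday evening
    ("car_breakin", 34.0505, -118.2505, datetime(2024, 6, 2, 22, 0)),  # Sunday evening
    ("car_breakin", 34.0605, -118.2405, datetime(2024, 6, 1, 20, 0)),  # Saturday evening
    ("car_breakin", 34.0505, -118.2505, datetime(2024, 6, 3, 21, 0)),  # Monday: other band
]
print(hotspots(incidents, "car_breakin", ("weekend", "evening"), top_k=1))
```

Here the top cell accounts for two of the three weekend-evening break-ins; the Monday incident falls into a different time band and is not counted.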

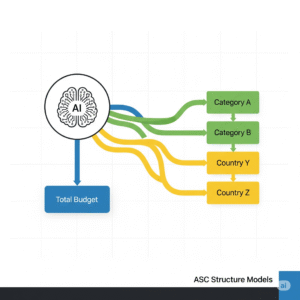

Smarter Deployment: AI-Powered Resource Allocation

The other side of the AI public safety coin is intelligent resource allocation. Knowing where crime might happen is only half the battle; getting the right resources there at the right time is just as critical. AI can analyze patrol officer locations, traffic patterns, and real-time incident reports to suggest the most efficient deployment strategies.

For instance, if multiple calls come in from different parts of a city, an AI system can recommend which units should respond to which incidents to minimize travel time and maintain coverage across all precincts. The same approach extends beyond crime into emergency response more broadly: whether it’s a medical emergency, a fire, or a crime in progress, resources can be managed efficiently, which is a core tenet of modern AI public safety.
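The dispatch decision described above is an instance of the classic assignment problem: pair units with incidents so the total estimated travel time is as small as possible. Below is a minimal brute-force sketch, assuming travel-time estimates in minutes are already available (the function name `assign_units` and the numbers are hypothetical). It also shows why sending each incident its nearest unit is not always best.

```python
from itertools import permutations

def assign_units(travel_min):
    """Assign one unit per incident, minimizing total travel time.

    travel_min[u][i] is the estimated travel time (minutes) from unit u to
    incident i. Returns (assignment, total) where assignment[i] is the unit
    sent to incident i. Brute force: only practical for a handful of units.
    """
    n_units, n_incidents = len(travel_min), len(travel_min[0])
    if n_units < n_incidents:
        raise ValueError("need at least one unit per incident")
    best, best_total = None, float("inf")
    for units in permutations(range(n_units), n_incidents):
        total = sum(travel_min[u][i] for i, u in enumerate(units))
        if total < best_total:
            best, best_total = list(units), total
    return best, best_total

# Unit 0 is closest to both incidents. Greedily sending it to incident 0
# (2 min) leaves unit 1 a 9-minute trip to incident 1, for 11 minutes total.
travel = [
    [2, 3],  # unit 0
    [4, 9],  # unit 1
]
print(assign_units(travel))  # ([1, 0], 7)
```

For realistic fleet sizes, enumerating permutations becomes infeasible; the same problem can be solved efficiently with the Hungarian algorithm, for example via SciPy's `scipy.optimize.linear_sum_assignment`.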

Real-World Impact: How AI Public Safety is Deployed Today

The theoretical benefits of predictive policing AI are compelling, but how does this technology perform in the real world? Numerous police departments have piloted and implemented these systems, yielding a wealth of data on their effectiveness and challenges.

The applications extend beyond just predicting street crime. AI is being used to analyze gang activity, identify patterns in financial fraud, and even help in cold cases by finding new connections in old evidence. The common thread is the use of data to uncover insights that would be invisible to the human eye, a foundational principle of AI public safety.

Use Case 1: Proactive Patrols to Reduce Property Crime

Many agencies have used AI public safety tools to tackle recurring problems like burglary and auto theft. By identifying hotspot areas, departments can direct “preventative patrols” to these specific locations, where the visible presence of law enforcement can act as a deterrent. Several cities that adopted these strategies have reported measurable decreases in these property crimes within the targeted zones, one of the most frequently cited outcomes of predictive policing AI.
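A drop in a targeted zone is only meaningful relative to what would have happened anyway, since crime can fall citywide for unrelated reasons. One simple check, in the spirit of a difference-in-differences comparison, is to subtract the percentage change in comparable zones that did not receive extra patrols. The sketch below assumes you already have before/after incident counts; the function names and numbers are hypothetical.

```python
def pct_change(before, after):
    """Percentage change from before to after (before must be > 0)."""
    return 100.0 * (after - before) / before

def relative_change(target_before, target_after, control_before, control_after):
    """Change in targeted zones minus change in comparison zones, in percentage points."""
    return pct_change(target_before, target_after) - pct_change(control_before, control_after)

# Hypothetical counts: targeted zones fell 25%, comparison zones fell 5%.
print(relative_change(120, 90, 100, 95))  # -20.0
```

A negative result suggests the targeted zones improved more than the comparison zones did over the same period.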

Use Case 2: Optimizing Emergency Call Response

In large, congested cities, getting to the scene of an emergency quickly can be a matter of life and death. Some emergency services use AI to analyze real-time traffic data, road closures, and vehicle locations to find the fastest route to an incident. This application of AI public safety isn’t about predicting crime but about optimizing the response when a crime or emergency is already underway. It can shave minutes off response times, which can make all the difference in a critical situation.
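Route optimization of this kind can be framed as a shortest-path search over a road network whose edge weights are current travel times. As an illustration, here is Dijkstra's algorithm in Python, assuming a tiny hypothetical road graph in which traffic is already folded into the minutes on each road segment and closed roads are simply left out. Real dispatch systems work on far larger graphs with their own routing engines.

```python
import heapq

def fastest_route(graph, start, goal):
    """Dijkstra's shortest path over travel times in minutes.

    graph maps node -> list of (neighbor, minutes). Closed roads are omitted;
    live traffic is assumed to be reflected in the edge weights.
    Returns (total_minutes, path), or (inf, []) if goal is unreachable.
    """
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry
        visited.add(node)
        if node == goal:
            break
        for nbr, minutes in graph.get(node, []):
            nd = d + minutes
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if goal not in visited:
        return float("inf"), []
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]

road = {
    "station": [("A", 4), ("B", 2)],
    "A": [("scene", 3)],
    "B": [("A", 1), ("scene", 7)],
}
print(fastest_route(road, "station", "scene"))  # (6.0, ['station', 'B', 'A', 'scene'])

road["B"] = [("A", 5), ("scene", 7)]  # congestion on the B-to-A segment
print(fastest_route(road, "station", "scene"))  # (7.0, ['station', 'A', 'scene'])
```

Re-running the search after the weights change yields a different route, which is how updated traffic data changes the recommendation.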

Pioneers and Platforms: Case Studies in AI Public Safety

The landscape of AI public safety is shaped by both the law enforcement agencies that adopt these tools and the tech companies that develop them. Examining both provides a clearer picture of the technology’s potential and its real-world application.

Case Study 1: The LAPD and PredPol

One of the most well-known early adopters of predictive policing AI was the Los Angeles Police Department (LAPD), which used a tool called PredPol (now Geolitica). The software analyzed historical crime data to generate daily predictions of crime hotspots. The goal was to direct patrols to these areas to deter crime. While the department reported some success in reducing crime rates in certain divisions, the program also faced significant criticism from civil liberties groups and community activists over concerns about bias and over-policing, highlighting the complex social challenges inherent in AI public safety.

Case Study 2: ShotSpotter and Acoustic Surveillance

ShotSpotter is another company at the forefront of AI public safety technology. Its system uses a network of acoustic sensors to detect, locate, and alert law enforcement to gunfire in real time. AI algorithms help filter out other loud noises (like fireworks or car backfires) to reduce false alerts. This technology allows police to respond to gunfire incidents much faster, often even when no one calls 911. It’s a powerful tool for improving response times and collecting evidence, but it also raises questions about surveillance and data privacy, which are central to the broader debate around AI public safety.
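Locating a sound with a network of sensors can be illustrated with time-difference-of-arrival (TDOA) multilateration: the same bang reaches each sensor at a slightly different time, and only one position is consistent with all of those differences. The sketch below is a brute-force grid search in Python under simplifying assumptions (a flat 2D area, known sensor positions in meters, a constant speed of sound, perfect timestamps). It is not ShotSpotter's actual method, and the classification step that separates gunfire from fireworks is not shown; `locate_source` and its parameters are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate value in air at about 20 °C

def locate_source(sensors, arrivals, extent=1000.0, step=5.0):
    """Estimate the (x, y) position of a sound from its arrival times.

    Uses time differences relative to the first sensor, so the unknown
    emission time cancels out. Searches a square grid [0, extent]^2 with
    the given step (meters) and returns the best-fitting grid point.
    """
    ref_t = arrivals[0]
    best, best_err = None, float("inf")
    n = int(extent / step) + 1
    for ix in range(n):
        for iy in range(n):
            x, y = ix * step, iy * step
            d0 = math.dist((x, y), sensors[0])
            err = 0.0
            for s, t in zip(sensors[1:], arrivals[1:]):
                predicted = (math.dist((x, y), s) - d0) / SPEED_OF_SOUND
                err += (predicted - (t - ref_t)) ** 2  # squared TDOA residual
            if err < best_err:
                best, best_err = (x, y), err
    return best

sensors = [(0, 0), (1000, 0), (0, 1000), (1000, 1000)]
true_source = (300, 400)
emitted_at = 2.0  # unknown to the solver; cancels out in the differences
arrivals = [emitted_at + math.dist(s, true_source) / SPEED_OF_SOUND for s in sensors]
print(locate_source(sensors, arrivals))  # (300.0, 400.0)
```

A grid search keeps the logic easy to follow; with noisy real-world timestamps, a least-squares solver would normally replace it.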


The Critical Debate: Ethics and Bias in AI Public Safety

The power of AI public safety comes with immense responsibility. Using historical data to predict future crime is a practice loaded with ethical risks, primarily concerning algorithmic bias and data privacy. A truly effective and just AI public safety framework must confront these challenges head-on.

The Ghost in the Machine: Algorithmic Bias

The most significant challenge for predictive policing AI is bias. AI systems learn from the data they are given. If historical crime data reflects past biases in policing (for example, if certain neighborhoods have been disproportionately patrolled and thus have more arrests on record), the AI will learn this bias. It will then recommend sending more officers back to those same neighborhoods, creating a feedback loop that can lead to over-policing and the unfair targeting of minority communities. Acknowledging and actively working to mitigate this bias is the single most important task in the ethical implementation of AI public safety. For more on the broad societal impact of AI, research from institutions like McKinsey provides deep analysis.
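The feedback loop can be seen in a deliberately crude, deterministic toy model. Assume two neighborhoods with identical true crime, a historical record that happens to be skewed 60/40, a policy that sends patrols to whichever area has the most recorded incidents, and (the key hypothetical simplification) that incidents are only recorded where officers are present. The function name and all numbers are hypothetical; this is not a model of any deployed system.

```python
def simulate_feedback(recorded, true_incidents, periods, detection=0.5):
    """Toy model of a predictive-policing feedback loop.

    recorded: initial recorded-incident counts per neighborhood.
    true_incidents: actual incidents per period in each neighborhood.
    Each period, patrols go to the neighborhood with the most recorded
    incidents, and only there is a fraction `detection` of the true
    incidents added to the record.
    """
    recorded = list(recorded)
    for _ in range(periods):
        hotspot = max(range(len(recorded)), key=lambda i: recorded[i])
        recorded[hotspot] += detection * true_incidents[hotspot]
    return recorded

# Equal true crime, but a skewed starting record.
history = simulate_feedback([60, 40], [10, 10], periods=10)
print(history)  # [110.0, 40]
print(round(history[0] / sum(history), 2))  # 0.73, up from 0.6
```

Even though both neighborhoods have the same true crime level, the record for the second one never changes, so the data keeps "confirming" the original skew and the gap widens.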

Privacy vs. Protection: The Data Dilemma

To be effective, AI public safety systems require vast amounts of data. This raises legitimate concerns about privacy and surveillance. Where do we draw the line between collecting data for public safety and infringing on the privacy of citizens? A robust AI public safety policy must include strict regulations on what data can be collected, how it is stored, and who can access it. Transparency with the public about how these technologies are being used is essential to maintaining trust and ensuring accountability.

A Beginner’s Guide to Public Safety Data Analysis Tools

While frontline law enforcement uses specialized software, the principles of data analysis that power AI public safety can be understood and even practiced by analysts, researchers, and students using widely available tools. Understanding these tools can demystify the process.

- Tableau / Power BI: These are premier data visualization tools. A public safety analyst could use them to import crime data and create interactive maps showing hotspots, charts illustrating crime trends over time, and dashboards that combine multiple datasets for a comprehensive overview.

- MonkeyLearn: This is a no-code text analysis tool. It could be used to analyze thousands of unstructured incident reports to identify key themes or analyze community feedback from social media to gauge public sentiment about safety concerns.

- ChatGPT: While not a data analysis tool itself, advanced language models from developers like OpenAI can be incredible assistants. An analyst could use it to summarize long reports, write code in Python or R for statistical analysis, or brainstorm different community engagement strategies based on data-driven insights.
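Tools like MonkeyLearn rely on trained models, but the basic idea of surfacing recurring themes in free-text incident reports can be sketched with a simple word count. The Python below is a hypothetical stand-in, not how any particular product works: it lowercases each report, drops a small hand-picked list of filler words (`STOP_WORDS`, chosen only for this example), and counts what remains.

```python
import re
from collections import Counter

# Small, hand-picked filler-word list for illustration only.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "on", "at",
              "was", "were", "from", "near", "reported"}

def top_terms(reports, k=5):
    """Return the k most frequent non-filler words across free-text reports."""
    counts = Counter()
    for text in reports:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts.most_common(k)

reports = [
    "Window smashed on parked car near the station",
    "Car window broken, bag stolen from car",
    "Bike stolen from rack at the station",
]
print(top_terms(reports, 4))
# [('car', 3), ('window', 2), ('station', 2), ('stolen', 2)]
```

Even this crude count points to vehicle-related theft near the station as a recurring theme, which an analyst could then examine on a map.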

A Simple Workflow for Public Safety Data Analysis

- Define the Question: Start with a specific problem. For example: “Which three neighborhoods have seen the largest increase in petty theft over the past six months?”

- Gather the Data: Obtain anonymized incident data from a public portal or internal database.

- Clean and Prepare: Make sure the data is formatted consistently, and handle missing values and errors, for example by dropping or correcting incomplete records.

- Analyze and Visualize: Use a tool like Tableau to map the incidents and filter by date and crime type. Create a bar chart showing the change in incident count per neighborhood over time.

- Derive the Insight: From the visualization, you can clearly identify the neighborhoods that meet your criteria. This insight, derived from a simple analysis, is the foundational step for any AI public safety initiative.
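For readers who would rather script this than click through Tableau, the same steps can be sketched in plain Python. This is a minimal illustration under stated assumptions: rows are hypothetical `(date, neighborhood, crime_type)` tuples, "the past six months" is approximated as the last 182 days compared with the 182 days before, and "cleaning" means dropping incomplete rows and normalizing text. The function `largest_increases` and the sample data are hypothetical.

```python
from collections import Counter
from datetime import date, timedelta

def largest_increases(rows, crime_type, as_of, window_days=182, top_n=3):
    """Rank neighborhoods by the increase in one crime type between two windows.

    rows: iterable of (incident_date, neighborhood, crime_type) tuples.
    Recent window: (as_of - window_days, as_of]; prior window: the same
    length immediately before it.
    """
    recent_start = as_of - timedelta(days=window_days)
    prior_start = recent_start - timedelta(days=window_days)
    wanted = crime_type.strip().lower()
    recent, prior = Counter(), Counter()
    for when, hood, ctype in rows:
        # Clean and prepare: skip incomplete rows, normalize text fields.
        if when is None or not hood or not ctype:
            continue
        if ctype.strip().lower() != wanted:
            continue
        hood = hood.strip().title()
        if recent_start < when <= as_of:
            recent[hood] += 1
        elif prior_start < when <= recent_start:
            prior[hood] += 1
    # Analyze: change in count per neighborhood, largest increase first.
    change = {h: recent[h] - prior[h] for h in set(recent) | set(prior)}
    return sorted(change.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]

rows = [
    (date(2024, 3, 10), "Riverside ", "Petty Theft"),
    (date(2024, 5, 1), "riverside", "petty theft"),
    (date(2024, 6, 12), "Riverside", "petty theft"),
    (date(2023, 9, 15), "Riverside", "petty theft"),
    (date(2024, 4, 2), "Hillcrest", "petty theft"),
    (date(2024, 4, 3), "Hillcrest", "burglary"),      # different crime type
    (date(2024, 1, 5), "Harbor", "petty theft"),
    (date(2023, 8, 1), "Old Town", "petty theft"),
    (date(2023, 8, 2), "Old Town", "petty theft"),
    (None, "Hillcrest", "petty theft"),               # missing date: dropped
    (date(2024, 2, 1), "", "petty theft"),            # missing neighborhood: dropped
]
print(largest_increases(rows, "petty theft", as_of=date(2024, 6, 30)))
# [('Riverside', 2), ('Harbor', 1), ('Hillcrest', 1)]
```

Ties are broken alphabetically here so the ranking is reproducible; a chart of the `change` values would serve as the visualization step.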

The Future of AI Public Safety: A Balancing Act

The journey of AI public safety is just beginning. As technology becomes more powerful, its potential to create safer, more efficient communities will only grow. However, the path forward must be paved with caution, transparency, and a steadfast commitment to equity and fairness. Predictive policing AI and other tools can be powerful allies in the mission to protect and serve, but they are not a replacement for community trust, human judgment, and compassionate policing. The future of AI public safety depends on our ability to strike the right balance between technological innovation and our core human values.